Celestial AI: silicon photonics and the "memory wall"

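Many companies are trying to break through the "memory wall": the growing gap between how fast processors can compute and how fast memory can feed them data. Complex AI workloads and data-intensive applications have made this gap increasingly acute. Of these companies, Celestial AI shows real promise.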

Welcome to the first edition of NextUp! This week we’ll investigate a compelling Series C company out of Santa Clara, California: Celestial AI.

Summary:

Celestial AI is pioneering a transformative AI computing technology with its proprietary Photonic Fabric. This optical interconnect platform pushes the limits of speed, energy efficiency, and scalability for data-intensive AI workloads. Computing architectures built on traditional electrical interconnects face significant bandwidth and latency constraints, and those constraints tighten as demand for AI-driven applications accelerates across industries. By using light to transfer data, Celestial AI addresses these bottlenecks and unlocks new levels of computational performance. For this reason, Celestial AI is uniquely positioned to become a foundational infrastructure provider for the next generation of AI applications.

Why now?

High data volume and velocity requirements: Global data generation and consumption are growing exponentially, driven by edge computing, real-time data analysis, and AI advancements.

Performance-driven AI infrastructure needs: Traditional electrical interconnects struggle with AI workloads because of their limits on bandwidth, latency, and power consumption. Photonic solutions offer a compelling alternative.

Cost and energy efficiency needs: Hyperscalers and enterprises are trying to control costs and reduce their carbon footprint even as workloads and data volumes grow substantially. Celestial AI’s energy-efficient architecture is well suited to address both challenges.

Product & Technology:

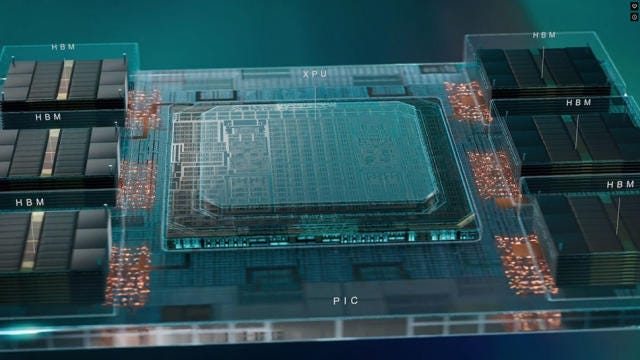

Celestial AI’s Photonic Fabric technology uses optical interconnects to transmit data as light rather than electricity. This approach provides the following benefits:

25x greater bandwidth at 10x lower latency than competing optical interconnect solutions, according to the company.

Overcomes the limitations of co-packaged optics (CPO) by delivering optical signals directly to any point on the compute die, rather than only to its edge.

Supports current HBM3E and next-generation HBM4E bandwidth and latency requirements at very low, single-digit pJ/bit power.

How it works:

At its core, the Photonic Fabric uses light instead of electricity to transmit data, which allows for significantly higher bandwidth and lower latency compared to traditional electronic interconnects.

The key innovation of the Photonic Fabric lies in its ability to deliver optically encoded data directly to any location on the compute die, not just to the edge of the processor as in conventional co-packaged optics (CPO) approaches. This is achieved through a proprietary silicon photonic modulation technology that is approximately 60 times more thermally stable than traditional ring resonators used in other optical interconnects.
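The thermal-stability figure is easier to judge with some context. The sketch below applies the standard first-order estimate for how far a silicon microring's resonant wavelength drifts with temperature, Δλ/ΔT ≈ λ·(dn/dT)/n_g. The wavelength, thermo-optic coefficient, and group index are typical textbook values assumed for illustration, not Celestial AI specifications. The division by 60 simply applies the claim above.

```python
# First-order estimate of silicon microring resonance drift with temperature:
#   d(lambda)/dT ~= lambda * (dn/dT) / n_g
# All constants are ASSUMED typical values for illustration, not vendor data.
WAVELENGTH_NM = 1550.0    # assumed operating wavelength (C-band)
DN_DT_SILICON = 1.86e-4   # assumed thermo-optic coefficient of silicon, per kelvin
GROUP_INDEX = 4.2         # assumed group index of the ring waveguide


def resonance_shift_pm_per_k(wavelength_nm: float = WAVELENGTH_NM,
                             dn_dt: float = DN_DT_SILICON,
                             n_g: float = GROUP_INDEX) -> float:
    """Resonance drift in picometers per kelvin (1 nm = 1000 pm)."""
    return wavelength_nm * dn_dt / n_g * 1000.0


ring_drift = resonance_shift_pm_per_k()
stable_drift = ring_drift / 60  # applies the ~60x stability claim from the text

print(f"conventional ring: ~{ring_drift:.0f} pm/K")
print(f"60x more stable:   ~{stable_drift:.1f} pm/K")
```

Under these assumptions a conventional ring drifts by roughly 70 pm per kelvin. A swing of a few tens of kelvin therefore moves the resonance by well over a nanometer. This is why ring-based links typically depend on active thermal tuning, and why a much more stable modulator is attractive.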

By keeping data in the transport-efficient optical domain for as long as possible, the Photonic Fabric reduces latency and power consumption. The technology includes not only modulators but also other photonic components and sophisticated 4-to-5-nm control electronics, forming a complete architecture for high-speed, low-power data transmission.

The Photonic Fabric enables direct compute-to-compute and compute-to-memory links, which Celestial AI claims can deliver 25 times greater bandwidth at 10 times lower latency than competing optical interconnect alternatives. This performance improvement is particularly crucial for AI workloads that require massive data movement between compute and memory resources.
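A simple latency-plus-bandwidth model shows what the claimed 25x/10x improvement would mean for individual transfers. The baseline link figures (1 µs latency, 100 GB/s) are hypothetical placeholders. Only the improvement factors come from the text.

```python
def transfer_time_s(size_bytes: float, latency_s: float, bandwidth_bytes_per_s: float) -> float:
    """Latency-bandwidth model: fixed startup latency plus serialization time."""
    return latency_s + size_bytes / bandwidth_bytes_per_s


# HYPOTHETICAL baseline link, chosen only for illustration.
BASE_LATENCY_S = 1e-6      # 1 microsecond
BASE_BANDWIDTH = 100e9     # 100 GB/s

# Claimed improvement factors from the text: 25x bandwidth, 10x lower latency.
NEW_LATENCY_S = BASE_LATENCY_S / 10
NEW_BANDWIDTH = BASE_BANDWIDTH * 25


def speedup(size_bytes: float) -> float:
    """Ratio of baseline transfer time to improved transfer time."""
    before = transfer_time_s(size_bytes, BASE_LATENCY_S, BASE_BANDWIDTH)
    after = transfer_time_s(size_bytes, NEW_LATENCY_S, NEW_BANDWIDTH)
    return before / after


for size in (64, 1e6, 1e9):  # a cache line, 1 MB, 1 GB
    print(f"{size:>12.0f} bytes: {speedup(size):5.1f}x faster")
```

Small transfers, such as a 64-byte cache line, are dominated by latency and see close to the 10x latency gain. Gigabyte-scale transfers are dominated by bandwidth and approach the full 25x.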

One of the primary goals of the Photonic Fabric is to address the "memory wall" in AI computing. The technology increases the memory capacity each processor can address at high bandwidth, which makes it possible to build high-capacity, high-bandwidth, pooled memory systems interconnected by optics. Processor count would then be set by compute needs rather than by how much memory sits next to each chip. As a result, this approach could meaningfully reduce the number of processors needed for large AI models, improving overall system efficiency and lowering operational costs.
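Back-of-the-envelope arithmetic illustrates the capacity side of the memory-wall argument. If each processor can only address its own local HBM, memory rather than compute sets the number of processors needed just to hold a model's weights. The model size, precision, and per-processor memory figures below are hypothetical. The sketch also ignores activations, caches, and the compute actually required.

```python
import math


def processors_for_capacity(num_params: float, bytes_per_param: float,
                            mem_gb_per_processor: float) -> int:
    """Minimum processors needed just to hold the model weights in addressable memory."""
    total_gb = num_params * bytes_per_param / 1e9
    return math.ceil(total_gb / mem_gb_per_processor)


# HYPOTHETICAL model: 1 trillion parameters stored at 2 bytes each (16-bit).
PARAMS = 1e12
BYTES_PER_PARAM = 2

# HYPOTHETICAL memory per processor: local HBM only vs. a larger pooled allocation.
local_only = processors_for_capacity(PARAMS, BYTES_PER_PARAM, mem_gb_per_processor=80)
with_pool = processors_for_capacity(PARAMS, BYTES_PER_PARAM, mem_gb_per_processor=500)

print(local_only, with_pool)
```

With 80 GB per processor, 2 TB of weights needs 25 processors for capacity alone. If optically pooled memory let each processor address 500 GB, four would suffice on capacity grounds, and compute demand would then drive the final count.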

The Photonic Fabric is designed to be compatible with current HBM3E and next-generation HBM4E bandwidth and latency requirements while operating at very low single-digit pJ/bit power. It can be integrated as optical chiplets in existing multi-chip packaging flows without requiring software changes, making it easier for customers to adopt the technology.
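Energy per bit translates directly into link power. Because a pJ is 10^-12 J and a Tbps is 10^12 bit/s, pJ/bit multiplied by Tbps gives watts. The sketch below assumes a hypothetical 10 Tbps of traffic and sweeps across the single-digit pJ/bit range mentioned above.

```python
def link_power_watts(energy_pj_per_bit: float, bandwidth_tbps: float) -> float:
    """Power = energy per bit x bits per second (pJ = 1e-12 J, Tbps = 1e12 bit/s)."""
    return energy_pj_per_bit * 1e-12 * bandwidth_tbps * 1e12


# HYPOTHETICAL operating points; the text only says "single-digit pJ/bit".
for pj in (1, 5, 9):
    print(f"{pj} pJ/bit at 10 Tbps -> {link_power_watts(pj, 10):.0f} W")
```

At 10 Tbps, each additional pJ/bit costs about 10 W. Small per-bit differences therefore add up quickly across a datacenter.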

Market Opportunity:

The global AI infrastructure market was valued at somewhere between $43 billion and $55 billion USD in 2024. According to various analyst reports, it is expected to grow to roughly $250 to $310 billion USD by 2030, at a CAGR of roughly 26% to 30%. The global semiconductor memory market was valued at around $100 billion USD in 2023. Various analyst reports expect it to reach around $200 billion USD by 2033, a CAGR of roughly 7%.
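The CAGR figures above follow the standard compound-growth formula, CAGR = (end / start)^(1 / years) - 1. Applied to the memory market numbers from the text ($100B in 2023 to $200B in 2033), it reproduces the quoted rate of roughly 7%. The `project` helper is an illustrative addition that runs the formula forward.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: float) -> float:
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1.0 + rate) ** years


# Memory market figures from the text: ~$100B (2023) to ~$200B (2033).
memory_rate = cagr(100, 200, 2033 - 2023)
print(f"implied CAGR: {memory_rate:.1%}")  # doubling over 10 years is about 7.2% per year
```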

Optical interconnect technologies like the Photonic Fabric are poised to capture a sizable portion of this growth for two reasons. First, memory is critical to practically every computing application. Second, and more specifically, modern data-intensive applications and AI use cases increasingly need high-bandwidth, low-latency, scalable memory.

Celestial AI’s primary target customers are the four major hyperscalers: Amazon, Google, Microsoft, and Meta Platforms. Together, these four players make up roughly 70% of the datacenter infrastructure market. The company has publicly stated that deep collaborations with hyperscale datacenter customers, focused on optimizing system-level accelerated computing architectures, are a prerequisite for its solutions. It has also said it is excited to work with the giants of the industry to commercialize the Photonic Fabric. If Celestial AI turns these major players into customers, it would quickly gain a strong market-share advantage over competitors and new challengers.

Additional target customers include:

AI computing companies that are developing AI accelerators and other specialized memory-dependent AI hardware

Memory manufacturers looking to integrate advanced interconnect technologies

Semiconductor companies aiming to integrate optical interconnect solutions to advance their existing compute platforms (CPUs, GPUs, ASICs)

Organizations working to develop and maintain advanced AI models like LLMs, recommendation engines, or other data-intensive applications

High-performance computing (HPC) entities, such as research institutions or companies running complex simulations or data analysis, that require high-bandwidth, low-latency computing solutions

Celestial AI’s Photonic Fabric has potential applications across practically any industry vertical that expects to capitalize on vast amounts of data or increase AI adoption in the near future:

Financial services: Use cases like high-frequency trading and algorithmic AI, risk analysis, and data analysis where processing speed, capacity, and latency are critical.

Healthcare: The global AI market size for healthcare is expected to reach nearly $200 billion USD by 2030, covering use cases like disease diagnosis, genome analysis, medical robotics, and remote patient monitoring.

Insurance: AI within the insurance industry alone is forecast to be worth around $35 billion USD by 2030, with major use cases in claims processing, underwriting, marketing, and administration.

Automotive: Use cases like autonomous vehicles are incredibly data-dependent, capturing millions of data points from sensors, cameras, and IoT devices. Processing this information in real time to make decisions requires ultra-high bandwidth and low latency.

Manufacturing: Similar to the automotive industry, automation in manufacturing operations is heavily dependent on millions of data points and real-time processing.

With growing reliance on real-time, high-volume data processing and the expansion of larger, more sophisticated AI models, Celestial AI’s Photonic Fabric addresses a critical infrastructure bottleneck. This gives the company a robust growth trajectory across industries.

Commercialization Strategy and Revenue Streams:

Celestial AI is pursuing multiple avenues to bring their technology to market:

Technology licensing: Customers can choose to harness Celestial AI’s IP by licensing the technology and leveraging the Photonic Fabric as an extension of their existing compute platforms (CPUs, GPUs, ASICs). In such cases, the technology can be integrated as optical chiplets in existing multi-chip packaging flows without requiring software changes, making it easier for customers to adopt the technology.

End-to-end solutions: Customers can leverage the full end-to-end benefits of the Photonic Fabric with Celestial AI’s own proprietary Orion AI accelerator.

Collaborations and partnerships: Celestial AI is cultivating an ecosystem around the Photonic Fabric, partnering with companies like Broadcom for custom silicon design and Samsung for memory solutions. This collaborative approach aims to accelerate the adoption of the technology in emerging AI infrastructure, potentially leading to more efficient and powerful AI systems in the near future.

Given the company’s commercialization strategy, there are several potential revenue streams it can take advantage of, including:

Licensing fees around the Photonic Fabric IP and related proprietary technologies

Hardware sales from the end-to-end solution featuring the Orion AI accelerator

Recurring revenue from ongoing support, maintenance, and optimization contracts

Joint development projects with collaborators and strategic partners

Royalty agreements

Competitive Landscape:

The silicon photonics space has grown highly competitive recently. With the proliferation of large, complex generative AI models and increasing AI adoption in the enterprise and consumer spaces, much attention has turned to advances in the underlying hardware.

Celestial AI’s competitors include Lightmatter and Ayar Labs. Every player in the space builds on the same core idea of using optics rather than traditional electronic signals for data transfer, but each applies that principle in a different way.

Lightmatter is known for two flagship products: Envise and Passage. Envise is an AI accelerator that combines photonics, electronics, and novel algorithms in a single module. It essentially uses different colors of light to run computations in parallel on a single piece of hardware, which enables very fast processing. Passage is an optical interposer technology that excels at interconnecting chiplets. An interposer is essentially a bridge that lets integrated circuit components that wouldn't normally fit together connect and communicate with each other. Passage can connect up to 48 tiles on a single interposer and enable up to 768 Tbps of communication between tiles. It is valued for its dynamically reconfigurable fabric and excels at shorter-distance, in-package communication.

Ayar Labs’ flagship product is an in-package optical I/O technology called TeraPHY. It enables chip-to-chip communication at lower power consumption and latency than traditional electrical I/O technologies. TeraPHY is designed to replace copper-based connections, offering up to 100x higher bandwidth density. Ayar Labs has also developed the SuperNova light source, an external laser source that powers TeraPHY chiplets and provides high-performance, reliable optical data transmission. Like Lightmatter’s Passage, TeraPHY and SuperNova together excel at in-package communication, but they also support longer-distance, off-package communication.

Against this competition, Celestial AI has three key competitive advantages. The first is the Photonic Fabric’s ability to deliver optical signals directly to any point on the compute die, which is a unique capability in the market. Most optical interconnect approaches instead use a “beachfront” architecture, where optical-to-electrical conversion occurs at the edges of GPUs or ASICs. This limits bandwidth to the I/O that fits along the die perimeter. Overcoming this “beachfront challenge” also frees room around GPUs and ASICs for additional HBM (High Bandwidth Memory), which leads to more space-efficient architectures. This could increase the ROI of integrating the Photonic Fabric relative to other optical interconnect technologies, making it a highly compelling solution for potential customers.
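The beachfront limitation is fundamentally geometric. I/O that must sit at the die edge scales with the perimeter, while I/O that can land anywhere on the die scales with its area. The sketch below compares the two for square dies. The per-mm and per-mm² densities are hypothetical values chosen only to show the scaling, not vendor figures.

```python
def edge_bandwidth(side_mm: float, tbps_per_mm_edge: float) -> float:
    """Edge-only ("beachfront") I/O: bandwidth scales with the die perimeter."""
    return 4 * side_mm * tbps_per_mm_edge


def area_bandwidth(side_mm: float, tbps_per_mm2: float) -> float:
    """I/O delivered anywhere on the die: bandwidth scales with die area."""
    return side_mm ** 2 * tbps_per_mm2


# HYPOTHETICAL densities, chosen only to illustrate scaling.
EDGE_DENSITY = 1.0   # Tbps per mm of die edge
AREA_DENSITY = 0.5   # Tbps per mm^2 of die area

for side in (10, 20, 30):  # square die edge length in mm
    e = edge_bandwidth(side, EDGE_DENSITY)
    a = area_bandwidth(side, AREA_DENSITY)
    print(f"{side} mm die: edge {e:.0f} Tbps, area {a:.0f} Tbps")
```

Doubling the die edge doubles edge-limited bandwidth but quadruples area-limited bandwidth. In practice, the edge is also shared with HBM, which tightens the beachfront budget further.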

The second is Celestial AI’s vision for disaggregating memory from compute, enabling high-capacity, high-bandwidth, pooled memory systems interconnected by optics. The idea isn’t new in the photonics space. However, Celestial AI appears best positioned to deliver on it, given its direct-to-compute optical delivery and its impressive off-package bandwidth and latency characteristics. If the company can execute on this vision, memory and compute could be scaled independently, and HBM could be moved farther from the processor without sacrificing performance. That could dramatically expand available memory capacity, which has so far been constrained by the need to keep HBM physically close to processors to minimize latency. The resulting efficiency gains would have a major impact on the overall computing landscape. They would drive down costs for hyperscalers and enterprises and give data-hungry AI applications significantly more horsepower.
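Moving memory farther from the processor adds time-of-flight delay, which can be estimated as distance × group index / c. The sketch assumes a group index of about 1.47, typical for silica optical fiber. The medium and distances are illustrative assumptions, not details of Photonic Fabric deployments.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458
FIBER_GROUP_INDEX = 1.47  # ASSUMED typical group index for silica fiber


def propagation_delay_ns(distance_m: float, group_index: float = FIBER_GROUP_INDEX) -> float:
    """One-way time of flight through an optical fiber or waveguide, in nanoseconds."""
    return distance_m * group_index / SPEED_OF_LIGHT_M_PER_S * 1e9


for d in (0.01, 0.1, 1.0, 10.0):  # 1 cm, 10 cm, 1 m, 10 m
    print(f"{d:>5} m: {propagation_delay_ns(d):6.2f} ns one way")
```

At roughly 5 ns per meter, propagation adds only a few nanoseconds per meter of separation. Whether that is negligible depends on the access-latency budget of the workload.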

The third is the strength of Celestial AI’s IP portfolio, which recently grew to over 200 patents with the acquisition of the Rockley Photonics portfolio. The addition complements the existing Photonic Fabric portfolio, spanning advanced packaging, thermally stable silicon photonics, and system architectures for optical compute interconnect. The company also has a Tier-1 partner ecosystem that includes custom silicon/ASIC design services such as Broadcom, system integrators, and HBM and packaging suppliers such as Samsung. Combined with that ecosystem, the portfolio puts the company in a strong position to capitalize on future innovations.

Risks and Mitigations:

Execution Risk: Complex technology development and integration challenges.

Mitigation: Experienced team, strategic partnerships, and an iterative development approach.

Competition: Other companies working on optical interconnect solutions.

Mitigation: Strong IP position, significant performance advantages, and first-mover advantage with key customers.

Adoption Timeline: Potential delays in industry-wide adoption of new interconnect technologies.

Mitigation: Compatibility with existing standards (CXL, PCIe, UCIe, JEDEC HBM) to ease integration.

Capital Intensity: Ongoing R&D and commercialization costs.

Mitigation: Recent $175M funding provides runway and potential for strategic investments or partnerships.

Team:

Celestial AI’s leadership team is a compelling group of industry veterans. Dave Lazovsky, founder and CEO, previously managed more than $1 billion in semiconductor manufacturing equipment business at Applied Materials. He then founded his first startup, Intermolecular, and led it through its IPO.

Preet Virk, the company’s COO, brings deep technical knowledge from managing engineering teams in the semiconductor and data communications sectors. He previously served as SVP at MACOM and Mindspeed Technologies, where he drove the initial adoption of electro-optical solutions enabling 100G transceiver deployments in datacenters.

Celestial AI’s CTO, Phil Winterbottom, brings expertise that spans photonics, AI/machine learning hardware and software, and ASIC system architectures. After more than 10 years at Bell Labs, he served as VP of Engineering at an optical AI computing startup. He also co-founded and led the technical teams at Entrisphere (acquired by Ericsson) and Gainspeed (acquired by Nokia).

Investors and Funding:

Celestial AI’s latest Series C round ($175 million, March 2024) featured U.S. Innovative Technology Fund (USIT), AMD Ventures, Koch Disruptive Technologies (KDT), Temasek, Xora Innovation (Temasek's subsidiary), IAG Capital Partners, Samsung Catalyst, Smart Global Holdings (SGH), Porsche Automobil Holding SE, Engine Ventures (spun out of MIT), M-Ventures, and Tyche Partners. Earlier rounds featured Engine Ventures, Imec Xpand, and Fitz Gate (venture capital investor in the Princeton University ecosystem).

The company boasts a diverse range of investors: corporate venture arms like AMD Ventures and Samsung Catalyst, technology-focused funds like USIT and KDT, and global investment firms like Temasek. A few investors stand out as particularly strong strategic partners, such as AMD Ventures and Samsung Catalyst, and could lead to valuable future collaborations in the semiconductor and AI hardware space. Repeat investors like KDT, Temasek/Xora, Samsung Catalyst, and Tyche Partners have participated in multiple rounds, indicating continued confidence in the company. The involvement of investors like Thomas Tull's USIT and Koch Industries' KDT also suggests strong interest from prominent figures in technology investing, another positive signal.

To date, Celestial AI has raised $338.9M USD across its angel, seed, Series A, Series B, and Series C rounds.

Future predictions:

Continued growth and expansion: The company has announced that its latest funding round will enable multiple large-scale customer collaborations focused on commercializing the Photonic Fabric platform. As this effort gains momentum, the company will move beyond commercialization and more firmly into scaling its operations and expanding its customer base. At that stage, its Tier-1 ecosystem and supply chain partnerships, which feature established players like Broadcom and Samsung, should prove especially valuable. The company has an aggressive go-to-market strategy targeting major players in the datacenter infrastructure, computer memory, and semiconductor markets. Customers of this size are few and potential contracts are large, so each customer conversion could propel the company well ahead of competitors. As the pace of innovation in AI software keeps accelerating, the need for solutions like Celestial AI’s Photonic Fabric will only increase, steadily expanding the current market opportunity.

Potential IPO: Celestial AI’s competitor Lightmatter pushed its valuation past $1 billion USD during a Series C round in December 2023, moving the company into unicorn territory. Benchmarks like this from close competitors suggest a high potential ceiling for Celestial AI. Reaching similar valuations will require continued growth and expansion. Given the company’s key competitive advantages, however, this will be more a matter of execution than innovation. Additionally, given the quality of the firms already invested in the company, the expertise and guidance needed for a successful IPO are readily available. Lastly, Celestial AI’s open, flexible platform approach to memory and interconnect bandwidth leaves significant room to diversify its product suite and target different industries, markets, and use cases. This breadth could let the company grow beyond memory technology for AI/ML and HPC, creating long-term growth potential and expansion opportunities after an IPO.

Acquisition target: If the company continues to grow and succeed, it is quite likely that Celestial AI becomes an acquisition target for a semiconductor or memory manufacturer, or for a hyperscale cloud service provider (CSP). For a manufacturer or CSP, such an acquisition would bring a critical part of the infrastructure supply chain in-house, reducing costs and providing substantial business value. Additionally, the company has valuable technology, a strong IP portfolio, and potentially a strong market position in the near future. An acquisition by the right buyer would therefore help that buyer remain competitive in the highly contentious datacenter and AI infrastructure space.